Some Surprising Findings About Learning in the Classroom

- The quality of the teacher doesn't affect how much students learn (which doesn't mean it has no effect on other factors, such as interest and motivation).

- Low ability students learn just as much as high ability students when exposed to the same experiences.

- More able students learn more because they seek out other learning opportunities.

- Tests, more than measuring a student’s learning, reflect the student’s motivation.

I want to talk to you this month about an educational project that’s been running for some years here in New Zealand. The Project on Learning spent three years (1998-2000) studying, in excruciating detail, the classroom experiences of 9- to 11-year-olds. The study used miniature video cameras and individually worn microphones, as well as trained observers, to record every detail of individual students’ experiences during particular science, maths, or social studies units. The students selected were a randomly chosen set of four: two girls and two boys, two of above-average ability and two of below-average ability. Sixteen different classrooms were involved in the study.

On the basis of this data, the researchers came to a number of startling conclusions. Here are some of them (as reported by Emeritus Professor Graham Nuthall on national radio):

* that students learn no more from experienced teachers than they learn from beginning teachers

* that students learn no more from award-winning teachers than from teachers considered average

* that students already know 40-50% of what teachers are trying to teach them

* that there are enormous individual differences in what students learned from the same classroom experiences — indeed, hardly any two students learned the same things

* that low ability students learn just as much as high ability students when exposed to the same experiences

This is amazing stuff!

We do have to be careful about what lessons we draw from this. For example, I don’t think we should conclude that it doesn’t matter whether a teacher is any good. For a start, the study didn’t include bad teachers. (Personally, I had one university lecturer who actually put my knowledge of the subject into deficit: I started out knowing something about calculus, and after several months of listening to him, I was hopelessly confused.) Secondly, there is much more to the classroom experience than simply what the student learns from a particular study unit.

Nevertheless, the idea that a student learns as much from an okay teacher as from a great one is startling. Here’s a quote from Professor Nuthall: “Teachers like the rest of us are concerned for student learning and assume that learning will flow naturally from interesting and engaging classroom activities. But it does not.”

It’s not so surprising that different students learn different things from the same experiences (we all knew that), but we perhaps didn’t fully appreciate how true that is. The most surprising finding, though, is that low ability students learn just as much as high ability students when exposed to the same experiences. That is no doubt the finding most people will find hardest to believe. Clearly the more able students do learn more than the less able, so how does that work?

According to the researchers, “a significant proportion of the critical learning experiences for the more able students were those that they created for themselves, with their peers, or on their own. The least able students relied much more on the teacher for creating effective learning opportunities.”

This does in fact fit with my own experience. On a number of occasions, marveling at my son’s knowledge of various subjects, I have asked him where that knowledge came from. Invariably, it turns out to have come from books he read at home, rather than from anything he was taught at school. (And please believe I am not knocking my son’s schools or his teachers; I have been reasonably happy with them most of the time.)

In this interview, Professor Nuthall mentioned another finding that has come out of the research — that tests, more than measuring a student’s learning, reflect the student’s motivation. “When a student is highly motivated to do the best they can on a test, then that test will measure what they know or can do. When that motivation is not there (as it is not for most students most of the time) then the test only measures what they can be bothered to do.”

Thought-provoking!

Professor Nuthall’s research studies were cited in the 3rd edition of the Handbook of Research on Teaching (the “bible” for teaching research) as one of the five or six most significant research projects in the world. The research team of Professor Nuthall and Dr Adrienne Alton-Lee (who invented the techniques used in the Project on Learning) was cited in the most recent edition as one of the leading research teams in the history of research on teaching.

[see below for some of the academic publications that report the findings of the Project on Learning (plus an early article on the techniques used in the Project)]

The wider picture

An OECD report on learning states that, for more than a century, one in six pupils have reported that they hate or hated school, and a similar proportion have failed to achieve the literacy and numeracy skills needed to be securely employable. The report asks the question: “Maybe traditional education as we know it inevitably offends one in six pupils?”

In a recent special report on education, CNN claims that U.S. charter schools (publicly financed schools that operate largely independently of government regulation) now have nearly 700,000 students. More tellingly, recent figures put the number of children taught at home at more than a million, a 29% jump since 1999. (To put this in context, there are apparently some 54 million students in the U.S.)

One could argue that the rise in people seeking alternatives to a traditional education is a direct response to the (many) failings of public education, but this is assuredly a simplistic answer. Public education has always had major problems. At different times and places, these problems have been different, but a mass education system will never be suitable for every child. Nor can it ever, by its nature (basically a factory system, designed to instil required skills in as many children as possible), be the best for anyone.

Indeed, we are closer to a system that endeavors to approach students as individuals than we have ever been (we still have a long way to go, of course).

I believe the increased popularity of alternatives to public education reflects many factors, but most particularly the simple awareness that there ARE alternatives, and a growing lack of faith in professionals and experts.

Impaired reading skills are found in some 20% of children. No educational system in the world has mastered the problem of literacy; every existing system produces an unacceptably high level of failures. So we cannot point to a particular program of instruction and say, this is the answer. Indeed, I am certain that such an aim would be doomed to failure: given the differences between individuals, how can anyone believe that there is some magic bullet that will work for everyone?

Having said that, we now have a far better idea of the requirements of an effective literacy program. [see Reading and Research from the National Reading Panel]

Project on Learning references

- Nuthall, G. A. & Alton-Lee, A. G. 1993. Predicting learning from student experience of teaching: A theory of student knowledge acquisition in classrooms. American Educational Research Journal, 30 (4), 799-840.

- Nuthall, G. A. 1999. Learning how to learn: the evolution of students’ minds through the social processes and culture of the classroom. International Journal of Educational Research, 31 (3), 139-256.

- Nuthall, G. A. 1999. The way students learn: Acquiring knowledge from an integrated science and social studies unit. Elementary School Journal, 99, 303-341.

- Nuthall, G. A. 2000. How children remember what they learn in school. Wellington: New Zealand Council for Educational Research.

- Nuthall, G. A. 2001. Understanding how classroom experiences shape students’ minds. Unterrichtswissenschaft: Zeitschrift für Lernforschung, 29 (3), 224-267.

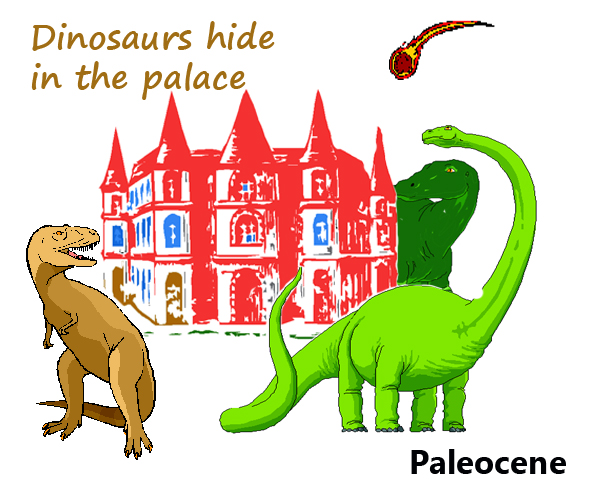

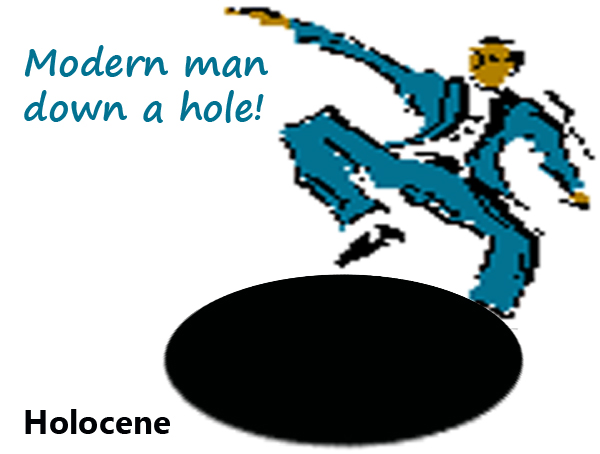

Instead of “Holograms are very recent”, you might want to form an image of someone falling into a hole (tying the Holocene to the “Age of Humans”).

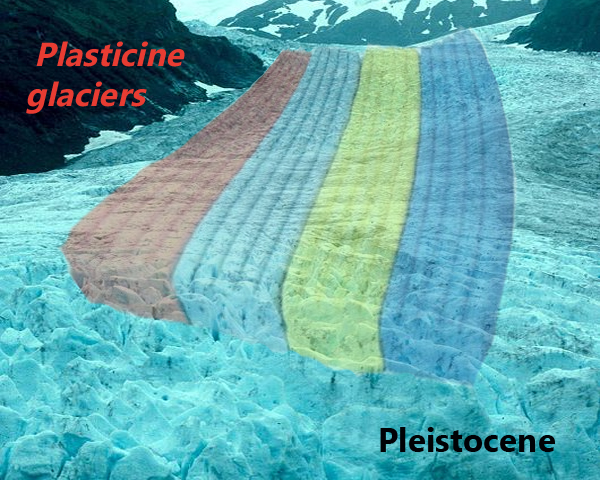

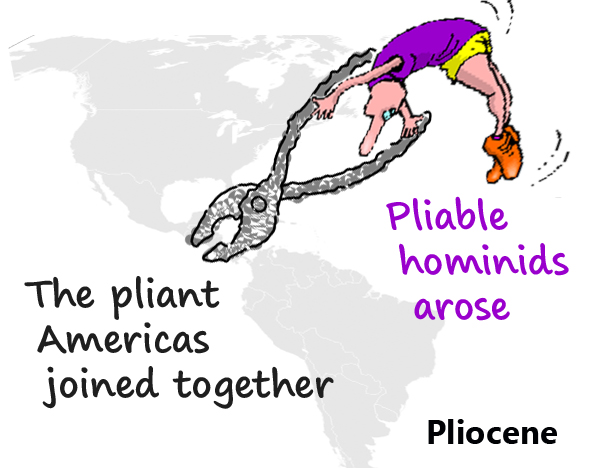

If you can visualize very limber ape-like humans (perhaps in distorted postures), Pliable hominids might be satisfactory; otherwise you may need to fall back on the pliers, perhaps with an image of pliers bringing North and South America together.

Mild weather isn’t terribly imageable; you might like to imagine milk pouring from the joint where Africa and Eurasia have collided. Oligarchs is likewise difficult, but you could visualize elephants under olive trees, eating the olives.

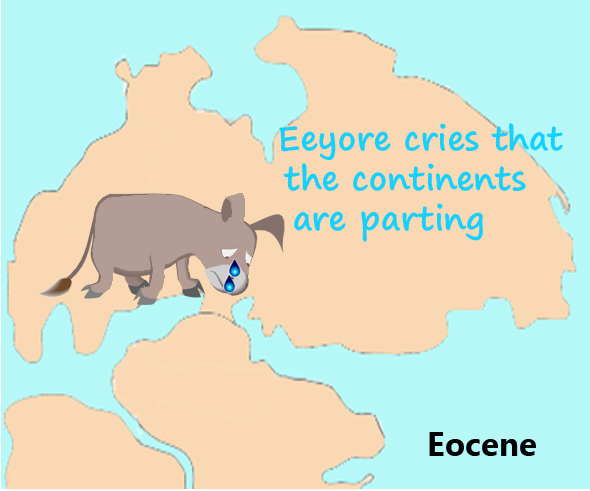

And now of course, we come to the most difficult — the Eocene. Here’s a thought, for those brought up with Winnie the Pooh. If you have a clear picture of Eeyore, you could use him in this image. Perhaps Eeyore is standing on one part of the separating Laurasia (looking appropriately disconsolate).